With Mark Zuckerberg currently standing trial for both Facebook and the entire tech industry’s past decade, we have to take a moment and ask: how could we have done better? How can we move forward from here?

While the media seems focused on Zuckerberg’s potential resignation and the Dow dropping in response to Facebook coming under fire for oversharing information through a Cambridge Analytica-built app, we in tech should take a moment to consider the implications for the work we do and how we do it.

Revenue models aside for a moment, what could an ethical privacy and information future look like in the industry? It is an important question to consider at this pivotal moment, not only because the laws are likely to change, but also because, at this point in time, there really isn’t an industry that isn’t touched by software — be that healthcare, government, ecommerce or education.

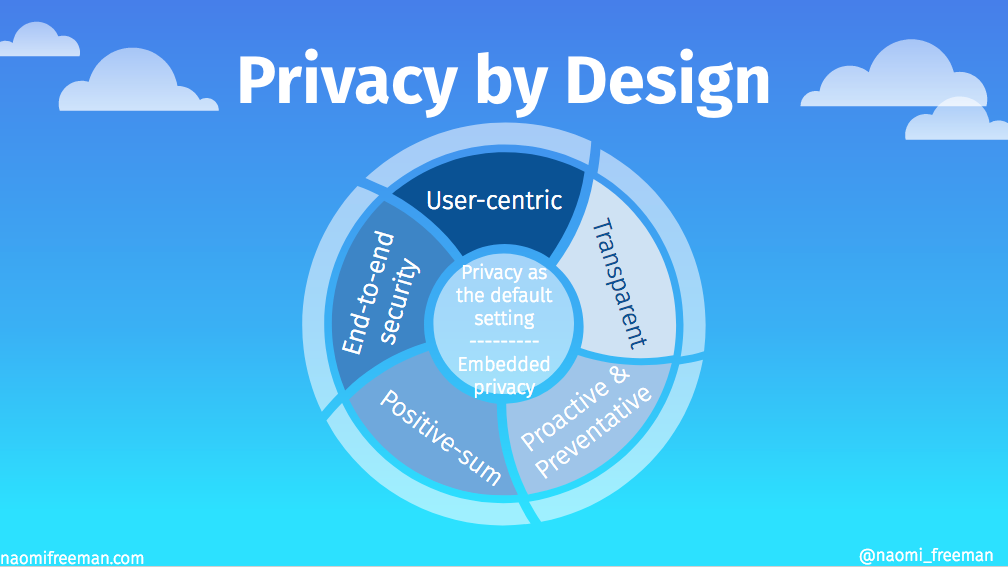

Privacy by design comes out of a Canadian healthcare tradition of fair information access and sharing. Long before the digital age, Canadian healthcare had strict privacy and information-sharing policies in place, which regulate everything from what kind of data can be stored to how it can be stored and how long it can be kept before its lifecycle expires and it needs to be destroyed.

The policies reach down to the most granular of potential actions. In a children’s hospital, for example, you can’t store data in a non-medical volunteer database about the committee a child volunteers on or belongs to, because someone could work backwards from the type of volunteer work they do to their potential medical diagnoses.

As data started to go digital, the idea of “privacy by design” was born from this tradition and these policies.

User-centric

User-centric in this case means elevating the users’ interests above all else.

This may seem like a radical concept from the Silicon Valley perspective, where monetizing users is all about selling information and data.

Outside of Silicon Valley, however, are all kinds of applications and websites that will demand a user-centric approach to data protection and privacy. Healthcare is one of the first examples that springs to mind. Ethically mandated to protect their clients even before the digital age, many healthcare organizations have in fact moved this kind of duty of care to privacy and protection of their clients into legal policy for the digital age.

Why should the Valley care when it’s not managing sensitive information?

Because all information is sensitive. What seems like innocuous data for one person could be a potentially life-threatening breach for another.

Transparent

Transparency here has two parts: being open with users about the policies for keeping and sharing their data, and allowing external verification that the project build is proceeding according to stated promises.

The first part is about transparency to users. We should notify users before a privacy change occurs. What if they don’t like it? Well, they’ll probably leave. Staying user-centric, we have to remember the user has a right to refuse to participate in your information and data-sharing practices. When did we begin to assume a CEO and company’s interests were of greater value than any individual’s? When did we begin to feel okay with the idea that a CEO and private company had a greater right and better knowledge than ourselves to share our data?

Let’s look at a concrete example, using Facebook itself. Imagine you read a news article saying Facebook has already given local police services access to better monitor people on Facebook. This is the first anyone has heard of it, so the change was implemented without notifying users and only came to light through the media.

Your first thought might be: “Oh, that’s wonderful. Another way to reduce crime. I understand where that idea came from and I think it’s a good idea.”

Now stop and consider this for a moment. In some jurisdictions, it is still a criminal offence to attempt suicide, whether the act is seen through or not. So say a 16-year-old publishes a post about how depressed they are, and the next day publishes a post indicating they are likely to take their own life.

Training in suicide prevention says that, if a person is posting such content, it is serious and also likely a cry for help and an attempt to connect with others in their network. However, if police have free access to Facebook content, they would be within their rights to punish this person to the full extent of the law.

A one-time incident may not be predictable for a particular person. But consider people living with chronic mental illnesses: if Facebook were about to implement such a policy and notified all users before it happened, do you think they would feel safe continuing to use the platform? Why shouldn’t it be their right to revoke access to themselves and their information?

The second part of openness and transparency is related to how things are built. While I was leading development of a prototype build for Toronto Community Housing (a subsidized housing provider), there was an expectation that the team running the project would publish frequent reports about the successes and challenges encountered over the course of the project. The reports had to include the budget, the team members, a note on where the project was along the milestone timeline and whether that was on time or not. We would also note if we were talking to any new stakeholders or other third parties.

Finally, this information is stored and available for external verification, be that by an overseer, an auditor or an external security party hired to check how things are going.

This is a government transparency and openness standard, but I think we would do well to have similar transparency with our builds outside of government and other institutions.

End-to-end security

End-to-end security means ensuring security throughout the lifecycle of the data. Core to this is the idea that data has a lifecycle. In a Canadian hospital, for example, this means destruction of records after 7 years. In other contexts it’s different, but there is a right to data destruction. Along the way, the data should be appropriately encrypted and stored. In the final stage of its life, data should be destroyed wholly, completely and in a timely manner.

Security should be in place at every single point along the data’s lifecycle, from collection to storage to how it is transported.
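
To make the lifecycle idea a little more concrete, here is a minimal Python sketch of a retention check. Everything in it is an assumption for illustration: the `Record` type, the `is_expired` and `purge_expired` helpers, and the flat seven-year period borrowed from the hospital example above (approximated as 7 × 365 days). It is not taken from any real hospital system, and a real one would also have to destroy backups and copies, not just the live record.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Assumption: a flat seven-year retention period, borrowed from the hospital
# example and approximated as 7 * 365 days. Real periods vary by context.
RETENTION = timedelta(days=7 * 365)


@dataclass
class Record:
    record_id: str
    created_at: datetime


def is_expired(record: Record, now: datetime) -> bool:
    """Return True once the record has outlived its retention period."""
    return now - record.created_at >= RETENTION


def purge_expired(records: list[Record], now: datetime) -> tuple[list[Record], list[str]]:
    """Split records into those kept and the IDs of those due for destruction."""
    kept = [r for r in records if not is_expired(r, now)]
    destroyed = [r.record_id for r in records if is_expired(r, now)]
    return kept, destroyed


# Usage: one record is ten years old, the other one year old.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    Record("old", datetime(2015, 1, 1, tzinfo=timezone.utc)),
    Record("recent", datetime(2024, 1, 1, tzinfo=timezone.utc)),
]
kept, destroyed = purge_expired(records, now)
print(destroyed)  # ['old']
```

Returning the destroyed IDs separately is a deliberate choice in this sketch: it leaves a trail that destruction happened, without keeping the data itself.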

Positive-sum

The positive-sum directive is all about creating win-win situations rather than trade-offs. Some of the most fun I have in creating impact projects is sweeping away dichotomies. We can do all the things. Seriously. We just need to get creative. That’s why we’re paid the big bucks, right?

In traditional Valley thinking around these issues at the moment, there is more of a zero-sum mindset: we can have privacy or we can have security. This is simply not true. There are ways to navigate these questions without these major trade-offs happening.

The idea is that, overall, everyone is going to have a win, not one side or the other. Current models seem to be much more zero-sum, in favour of the company: the company gets $1,000, the user gets stalked because their location has been released. Doesn’t really seem fair, does it? We’re going to have to adjust our models so that our users are safe, whether that’s because users will want to leave and this hurts the revenue model, or because a law is enacted to ensure we treat people better.

Besides — don’t you want better privacy and protection for your own data?

Proactive & Preventative

Being proactive and preventative is all about coming from a defensive position while building technology. You want to be looking through the lens of risk-aversion. Of course we want to move fast and innovate, but we also need to think first: how could this be misused? We often look at our ideal user and their pathway, and very rarely stop to look at the other side of the equation. Who is the worst possible user and how will this benefit them? What could they achieve?

We want to anticipate potential breaches and ensure they never occur. What could happen while digitizing a housing repairs system? Suddenly you have the exact location of broken windows, doors and locks available online. How do you protect the person whose data that is? It’s not much use to a social housing resident if you fix a data vulnerability after their TV has been stolen.

In the housing repairs system we built, a lot of it was about collecting the minimal amount of data possible for use, separating information into different streams and APIs and encrypting information (well). We also changed the database structure to a less-traditional set-up, which helped separate data.
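
The actual housing repairs code isn’t shown here, so the following is only a sketch of the general idea, under stated assumptions. The field names, the `split_request` helper and the two in-memory dictionaries standing in for separate stores or APIs are all hypothetical. Encryption is left out on purpose; in practice each store would also be encrypted and have its own access rules.

```python
import secrets

# Hypothetical field sets: only what each stream needs, and nothing else.
REPAIR_FIELDS = {"issue", "urgency"}
IDENTITY_FIELDS = {"name", "unit_address"}


def split_request(raw: dict) -> tuple[str, dict, dict]:
    """Drop unneeded fields and split the rest into two separately stored parts.

    Returns a random link token, the repair details, and the identity details.
    """
    token = secrets.token_hex(16)  # random, so it reveals nothing about the tenant
    repair = {k: v for k, v in raw.items() if k in REPAIR_FIELDS}
    identity = {k: v for k, v in raw.items() if k in IDENTITY_FIELDS}
    return token, repair, identity


# Two dicts stand in for two separate stores with separate access rules.
repair_store: dict[str, dict] = {}
identity_store: dict[str, dict] = {}

raw_request = {
    "name": "A. Tenant",
    "unit_address": "12 Example St, Unit 4",
    "issue": "broken lock",
    "urgency": "high",
    "phone_model": "not needed, so never stored",
}

token, repair, identity = split_request(raw_request)
repair_store[token] = repair
identity_store[token] = identity
```

In this sketch, someone who gets into the repair stream alone learns that a lock is broken somewhere, but not where, because the address only exists in the other stream.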

There are serious, real-world consequences to not thinking from a defensive position.

Privacy as the Default Setting

The user should be able to log in to the system, never click anything, and know their data will be safe. With privacy as the default, privacy is not something you opt in to. It is the standard.

The idea is that a user should not have to take any action to have full protection. This is radically different from the current Valley model, where you might have to go into two or three menus to find a checkbox to protect your privacy. Frequently this is worded as “No, I don’t want this feature” rather than “Yes, please protect me”, which is, in and of itself, not user-centric.

An example could be location-sharing on a photo-sharing app. In many cases, companies are just turning this feature on, leaving users to catch up and find out how to turn it off, if they find out it’s on at all. A few years ago, I was chatting with a friend late at night. They said they had to go do something in the kitchen. To my surprise, I watched them leave their house, go to an alcohol store and return to their house, all on a tiny map in our chat. I asked if they had gone to buy alcohol, and they said no. I laughed at the whole situation at the time, but the truth is they had no idea I could sit there and watch them move about. With privacy as the default, this would never happen.

In a privacy-as-default situation, the user would be notified of potential changes about to occur on the platform. The notice would be in plain language, with short bullet points explaining what the feature is and how it will impact them. From there, the user would be notified when the feature has come online and be asked if they would like to participate. If they don’t click anything, their experience remains identical to how it was before the change was implemented.
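
Here is one way that flow could look in code: a minimal sketch, not how any real platform does it. The `PrivacySettings` and `User` types and the `announce_feature` and `opt_in` helpers are names I’ve made up for illustration. The point is only that every sharing setting starts switched off, a notice changes nothing, and a setting flips solely when the user asks for it.

```python
from dataclasses import dataclass, field, fields


@dataclass
class PrivacySettings:
    # Every sharing feature starts off; the user never has to act to be protected.
    share_location: bool = False
    share_contacts: bool = False


@dataclass
class User:
    name: str
    settings: PrivacySettings = field(default_factory=PrivacySettings)
    notices: list[str] = field(default_factory=list)


def announce_feature(user: User, plain_language_notice: str) -> None:
    """Tell the user about a feature; this changes no settings."""
    user.notices.append(plain_language_notice)


def opt_in(user: User, setting: str) -> None:
    """Enable a sharing feature only on the user's explicit request."""
    valid = {f.name for f in fields(PrivacySettings)}
    if setting not in valid:
        raise ValueError(f"unknown setting: {setting}")
    setattr(user.settings, setting, True)


# Usage: both users get the notice, but only one of them clicks "yes".
silent = User("silent user")
keen = User("keen user")
for u in (silent, keen):
    announce_feature(u, "Location sharing: friends could see where you are in chat.")
opt_in(keen, "share_location")
```

The user who never clicks anything ends up with exactly the settings they started with, which is the behaviour described above.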

Embedded Privacy

Privacy is embedded at the core of the design of the app. The system is built for privacy. Privacy isn’t a gadget to be added on at the end. Following design-thinking practice, it’s about designing everything for privacy and security — from the infrastructure to the business practices to the systems.

In this model, privacy becomes a core functionality.

The embedded privacy model also encourages thinking about “what if”, not just implementing features, head-down. If you can play the what-if game before you start building, a large number of possibilities for misuse of data will become apparent. The idea is to build a safe zone all the way out to those seemingly ridiculous scenarios, rather than just building protection around some core features and functionality.

Summary

It may seem impossible to predict all of the possible ways your data policies could snowball into something that was never intended. One of the best ways to work towards harm-reduction in privacy and information sharing is to be open and transparent, putting the power in your users’ hands and being sure you embed privacy from the ground up, through every single layer of your application.

Empowering your users can help drive your builds.

This can be a scary prospect, because they might tell you things you really don’t like. Our industry is steeped in start-up and lean culture, though. Go out and chat to your users. Send them accessible information about their privacy, and their actions are going to give you the feedback you need to move forward.

Privacy by design can become your unique advantage and drive revenue in the long term.
